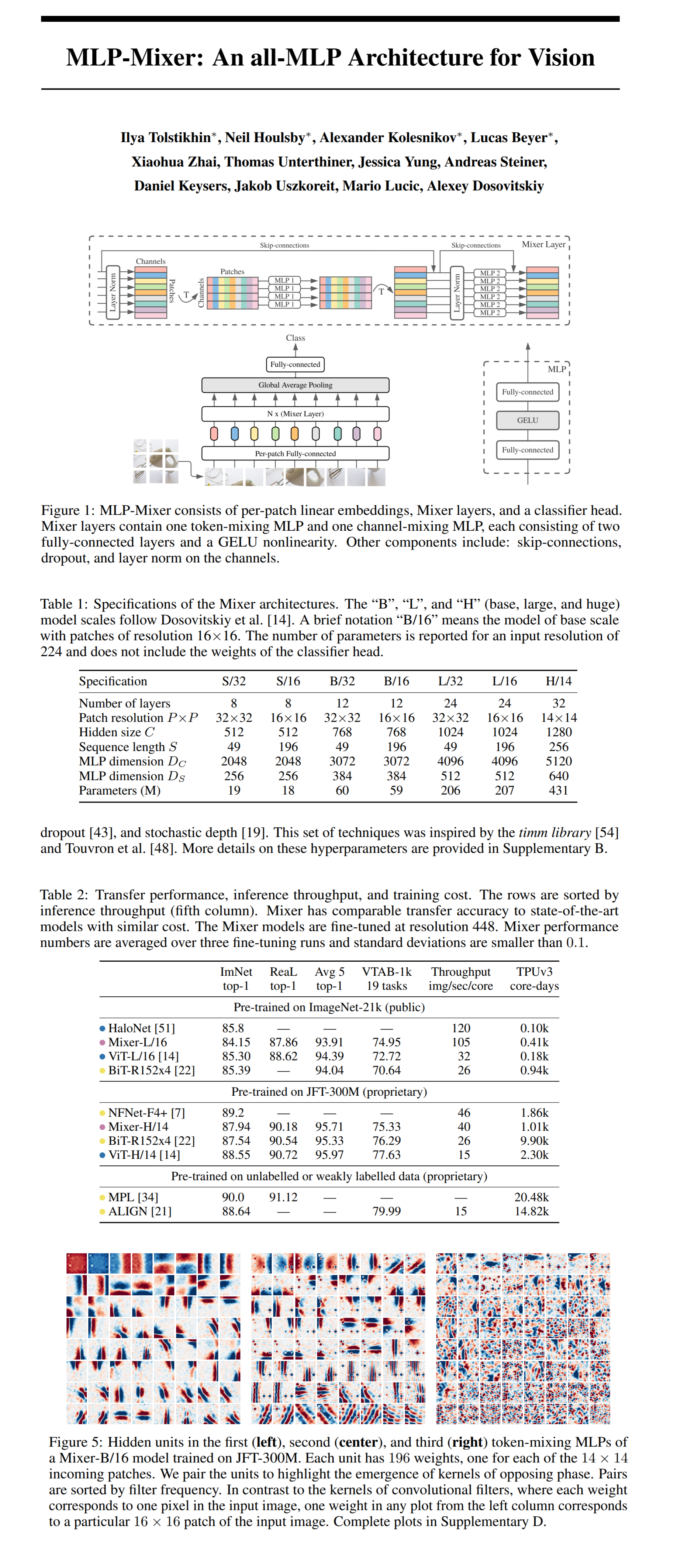

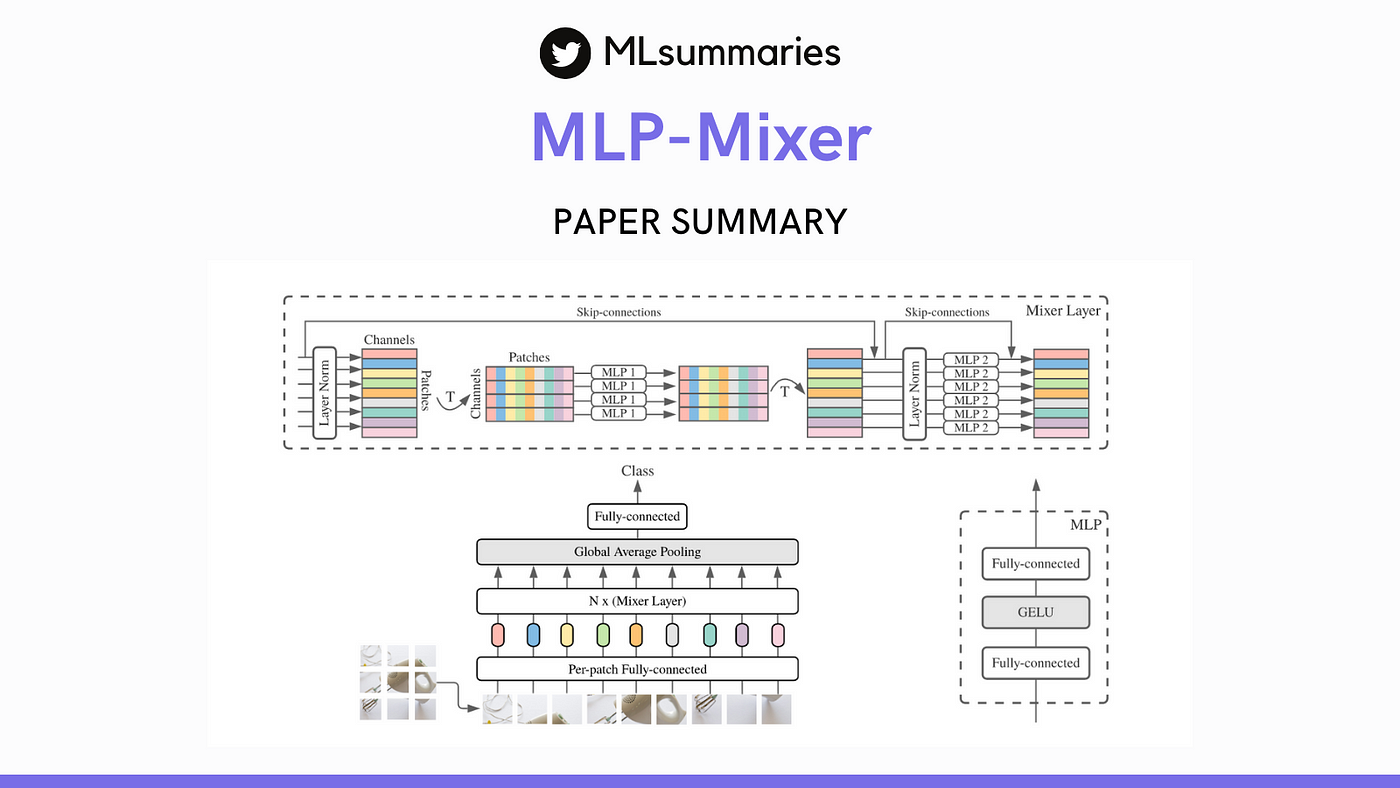

MLP-Mixer: An all-MLP Architecture for Vision — Paper Summary | by Gowthami Somepalli | ML Summaries | Medium

AK on X: "RaftMLP: Do MLP-based Models Dream of Winning Over Computer Vision? pdf: https://t.co/gZF22TVnnZ abs: https://t.co/2Wr0rtSu0Z github: https://t.co/AxBFNk1Qsj raft-token-mixing block improves accuracy when trained on the ImageNet-1K dataset ...

GitHub - sayakpaul/MLPMixer-jax2tf: This repository hosts code for converting the original MLP Mixer models (JAX) to TensorFlow.

![\[P\] MLP-Mixer-Pytorch: Pytorch reimplementation of Google's MLP-Mixer model that close to SotA using only MLP in image classification task. : r/MachineLearning](https://external-preview.redd.it/_Nwm_-IrNRlNST_hhDPtu5ntyZDXeerdhSBfo3hdgos.jpg?auto=webp&s=a4ffbe00799f72319b85bd5dc729a3c188ee3229)

[P] MLP-Mixer-Pytorch: Pytorch reimplementation of Google's MLP-Mixer model that close to SotA using only MLP in image classification task. : r/MachineLearning

GitHub - bangoc123/mlp-mixer: Implementation for paper MLP-Mixer: An all-MLP Architecture for Vision

AK on X: "A Generalization of ViT/MLP-Mixer to Graphs abs: https://t.co/wRr5Vsf5eS github: https://t.co/JKDMi4tBin https://t.co/ze1TXO1vsK" / X

Yannic Kilcher 🇸🇨 on X: "🔥Short Video🔥MLP-Mixer by @GoogleAI already has about 20 GitHub implementations in less than a day. An only-MLP network reaching competitive ImageNet- and Transfer-Performance due to smart weight

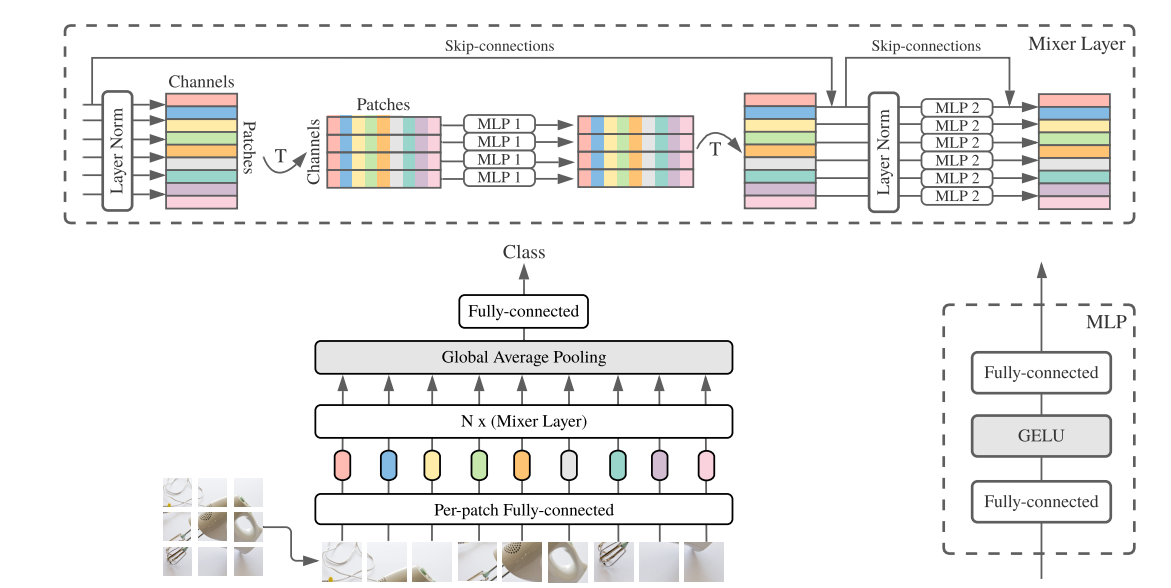

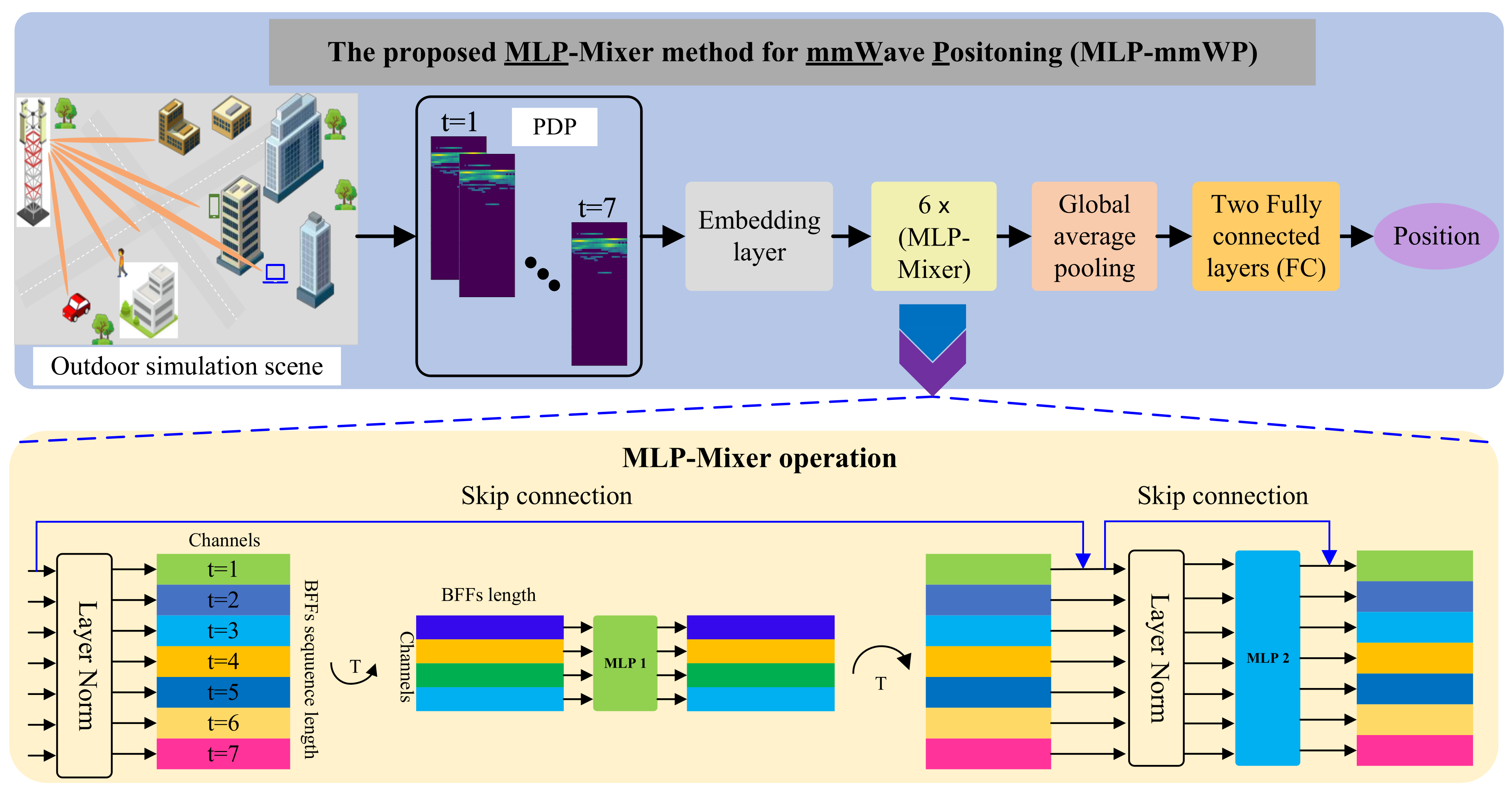

(a) is the MLP-Mixer architecture (formulas 1 and 2). (b) is the TGMLP... | Download Scientific Diagram

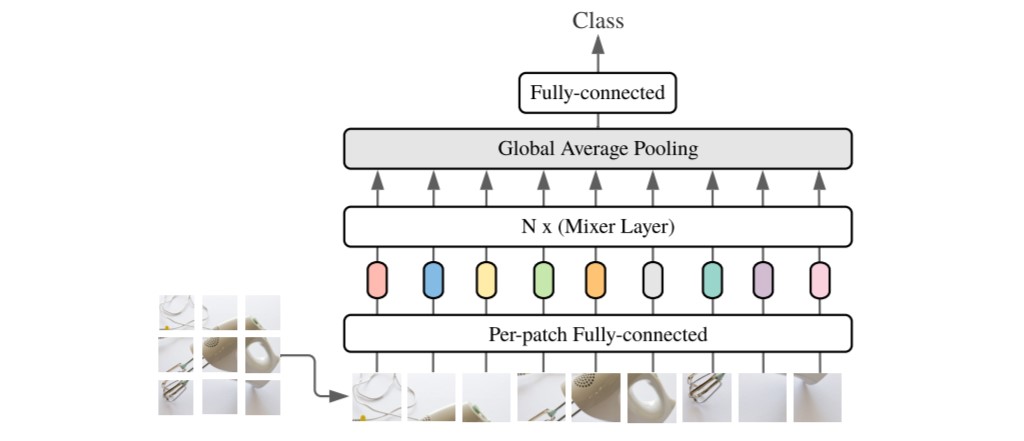

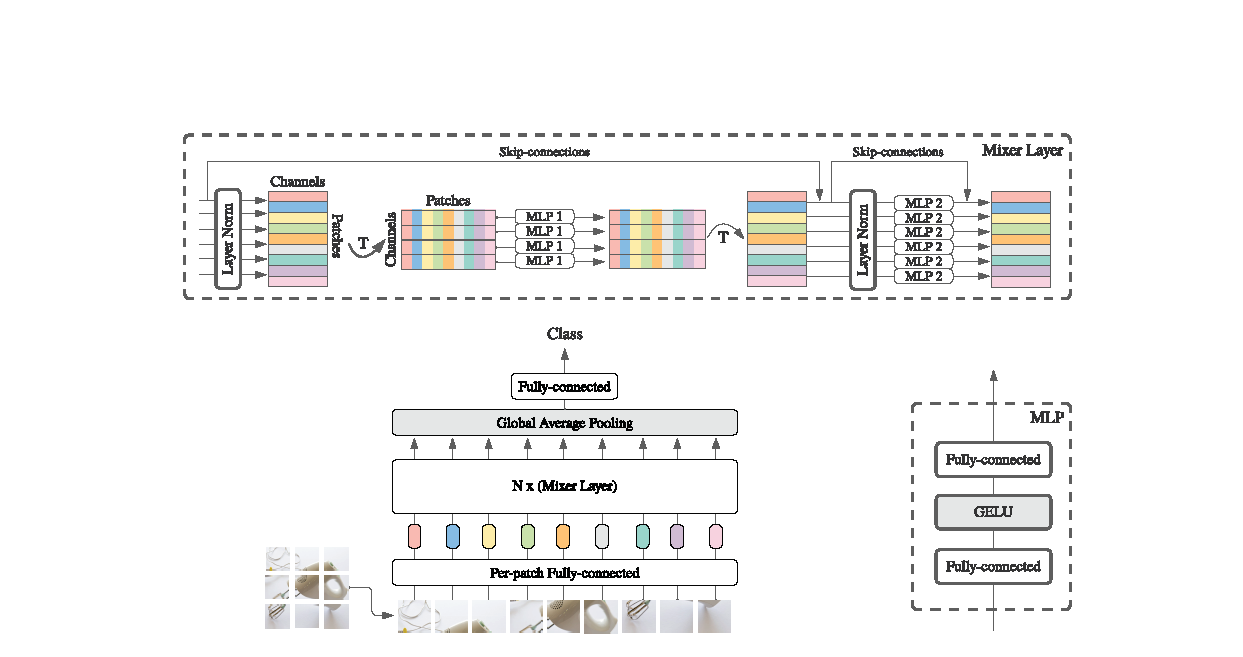

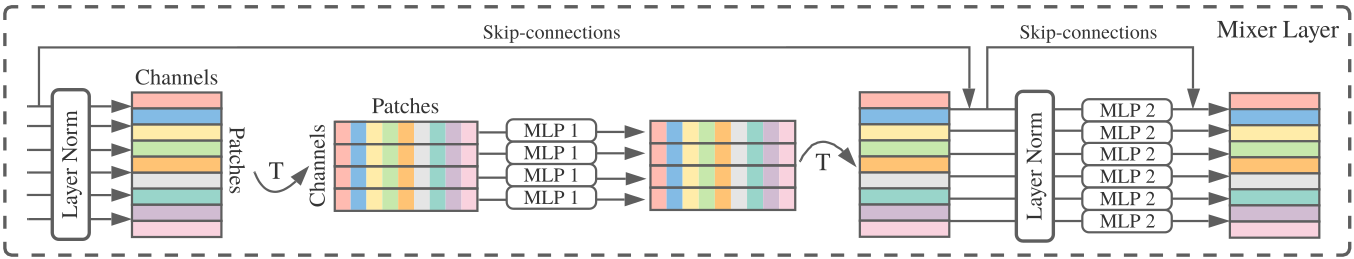

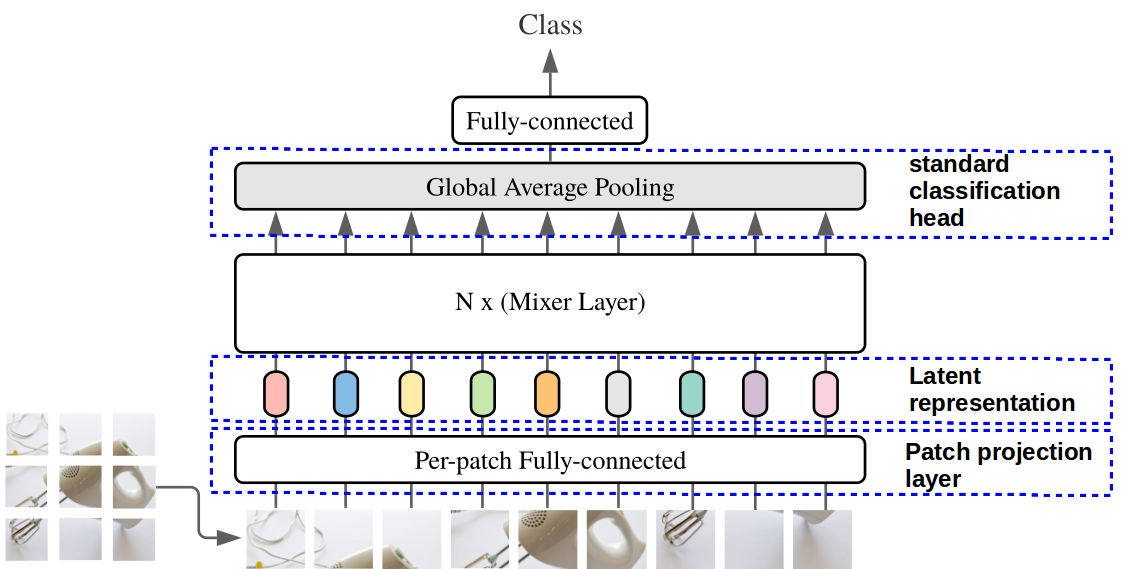

![\[Research 🎉\] MLP-Mixer: An all-MLP Architecture for Vision - Research & Models - TensorFlow Forum](https://discuss.tensorflow.org/uploads/default/optimized/2X/d/d18627debab539a6f795ebcabfa65faacb00bab8_2_1024x715.png)